Here’s something that may or may not surprise you: Tesla’s Autopilot can be fooled quite easily.

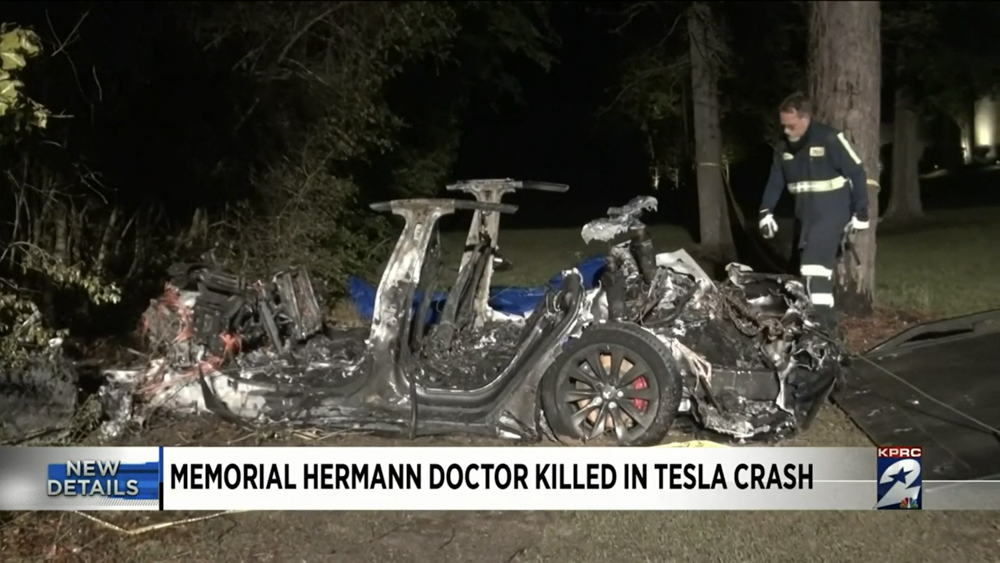

You’ve probably seen videos of, or read news articles about, people falling asleep at the wheel of a Tesla, along with the accidents that sometimes follow. One of the most notable was a fatal crash in Texas last April 19.

A 2019 Tesla Model S was traveling at high speed when, for some reason, it failed to make a turn, hit a tree, and burst into flames. Authorities found that neither of the car’s two occupants was in the driver’s seat. The accident raised a lot of eyebrows, so engineers from Consumer Reports set out to see how easily Tesla’s Autopilot driver-assist system could be tricked into operating with no one in the driver’s seat.

On a closed track, the engineers engaged Autopilot while the Model Y test vehicle was in motion. They then turned the speed dial down to 0, which brought the car to a complete stop, and chained a small weight to the steering wheel. Without opening the door or unbuckling the seatbelt (either of which disengages Autopilot), the driver slid over to the passenger seat. The speed dial was then turned back up, and the Model Y drove off on its own as if nothing had changed. In other words, Autopilot’s safeguards are easy to defeat.

This is unnerving, especially since Autopilot relied mainly on detecting pressure on the steering wheel to confirm that the driver was engaged (hence the requirement to keep your hands on the wheel), while its other safeguards were fooled without much difficulty. By contrast, driver-assist systems from other automakers, such as General Motors, Ford, BMW, and Subaru, use cameras and sensors to monitor the driver’s attention.

Some Tesla models do have in-car cameras, but these only record video during a crash or an emergency stop. Tesla retrieves these clips to help improve its driver-assist technology over time.

Driver-assist systems are also classified by level of driving automation, on the SAE’s scale from 0 to 5, with 5 being fully automated. Tesla’s Autopilot is a Level 2 system, which means it can steer, accelerate, and brake on its own, but only with a driver ready to take over the controls at any time.

While vehicles like the Audi A8 and the Mercedes-Benz S-Class have hardware capable of Level 3 autonomy, in which the system can handle driving on its own under certain conditions but a human must be ready to take over when asked, such technology still isn’t legal in the US. The point of this story? Driver-assist systems are only there to ease your workload, not to take over the wheel completely. At least for now.
